Measuring Developer Productivity

A mental framework for thinking about where to properly apply metrics

Last month, McKinsey published an article titled “Yes, you can measure software developer productivity” in which they claimed to have a proprietary framework for measuring software developer productivity that over a dozen companies are already using. A number of people responded, including Kent Beck and Gergely Orosz in a two-part article (part 1 and part 2) that I highly recommend you read. In that article the authors start by offering a mental model of the software engineering cycle: effort, output, outcome, impact. They go on to explain how DORA and SPACE, two modern measurement frameworks that already exist and that McKinsey seems to ignore completely, focus on measuring outcome and impact, or satisfaction, performance, activity, communication, and efficiency. Neither is focused on effort or output. In McKinsey’s system, however, nearly every one of the custom metrics measures effort or output. Beck and Orosz highlight one of my favorite eponymous laws, Goodhart’s: “When a measure becomes a target, it ceases to be a good measure.” They wrap up by suggesting that engineering teams measure effort and output for “debugging issues”, measure outcomes in a “do it sensibly” manner, and measure impact.

I wanted to write this article, but not because I think I’m going to add a bunch of interesting insights into measuring developer productivity. Folks like the authors of the above-mentioned article and others like Abi Noda, CEO of DX, have spent a great deal of time thinking about this challenge. However, I’ve definitely had my fair share of arguments, er, discussions with finance and other executives about this topic. Mostly I wanted to put a lot of other people’s thoughts into a framework. As I’ve mentioned before, I like frameworks because they help me organize my thoughts and they are usually easy to remember. So, here goes.

The first question to arise is, why measure productivity at all? In some cases, companies measure other teams like sales or marketing, and thus the impetus is to be fair in measuring every team. In other cases, engineering is the majority of the company in terms of numbers and most certainly in terms of costs. Therefore, the company leadership wants to measure their most expensive investment. Either way, I don’t think you can rebut the argument for wanting to measure.

The next set of questions is usually, what are they really trying to get at with the measurement? Stated another way, what is the question behind the question? Sometimes the company’s leadership wants to invest more but has no idea how much without understanding the ROI, and therefore they want metrics to help with this. In other cases they might think they should be getting more impact out of engineering than they are. The reality is that no matter what ROI you have on your investment in engineering, comparing it to that of any other team or company is apples to oranges. The product, code base, customers, culture, and internal processes are all too different from any other company for a valid comparison.

The other reality is that you cannot add more engineers to a team and not have a decrease in productivity, unless you actively work on the organization structures, tooling, and processes. We as an industry have known this since the publication of The Mythical Man-Month in 1975. But this doesn’t mean that a larger engineering organization is a net negative. Oftentimes you need to continue to grow your team if you want to continue to see top-line growth.

No matter what the motivation for the question, we are definitely in an era where engineering leadership needs to be prepared to measure productivity. If we don’t want someone declaring that KLOC is the thing to measure, we need to have our own well-thought-out opinions, so let’s start forming a mental model.

Software engineering is a craft, *clears throat* just as Code as Craft has been telling us for over a decade. This makes it similar to other highly skilled professions such as painting and surgery. The way I think about these is that each one allows for almost endless amounts of creativity in the actual process. Painters can use brushes, hands, and even trunks to paint, not to mention the myriad options of pigments and mediums. I don’t presume to know how a surgeon does their job, but a quick Google search of how to tie sutures yields numerous articles and videos. What you might think is a solved problem, the best knot for a suture, is still generating scholarly debate in modern times. Undoubtedly, surgery offers a lot of opportunities for applying creativity. An article in the Journal of Pediatric Surgery, Creativity and the surgeon, states, “...for an enthusiastic pediatric surgeon the pleasure derived from these activities is similar to that encountered when assembling a puzzle, playing chess, painting, performing music, or performing a flawless operative procedure.” As a consumer, what I care most about with regard to a surgeon, painter, or software developer is the impact (health, sense of wonder, completion of a task) that the output (surgery, painting, software) has on me.

Impact matters most, but it’s not that simple. I want my surgeon to have awesome impact stats: super high survival rates, low complication rates, and so on. But not all surgeons have the same specialty, just as software engineers work in different parts of the app and in different code bases. And just as some surgeons take on riskier cases, some engineers take on the more difficult tasks in the more complex parts of the code. So we can’t compare them to one another. Surgeons also need nurses, anesthesiologists, and lots of other specialists and support personnel, just as product engineers need enablement or platform engineers, site reliability engineers, data engineers, and lots of other folks to complete their tasks. So while impact matters most, it isn’t a very valuable comparison tool. And while impact is what I care about the most, it’s not all that I care about.

It’s pretty neat that an elephant can make a painting but no matter how beautiful I thought the art was, if the elephant was being mistreated to achieve that painting, I wouldn’t want anything to do with it. Similarly, I don’t want a software engineer to mistreat colleagues just to get something done. On teams that I’ve been part of, we’ve long abhorred the brilliant jerk. So, impact matters most but how you achieve that matters as well, within some constraints.

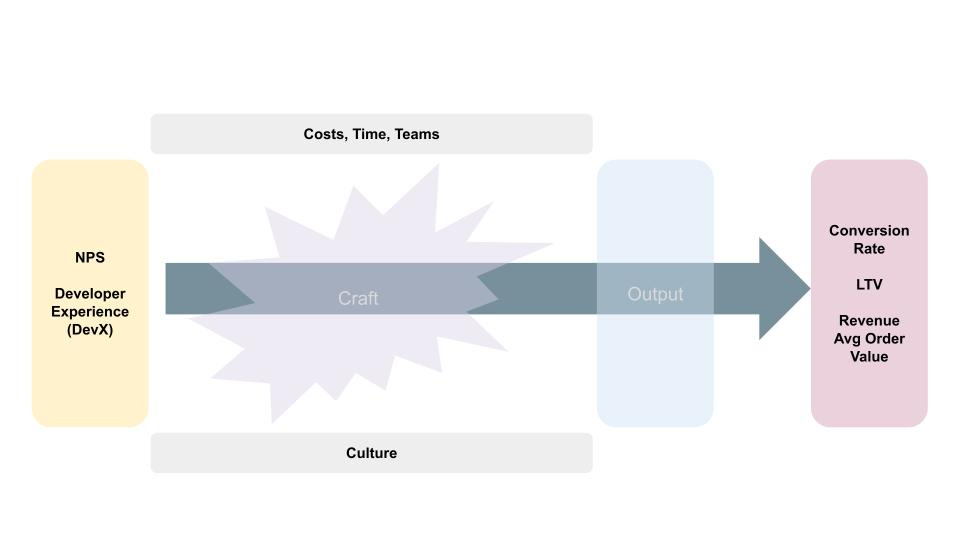

I also personally care about the people involved in these endeavors. I care because if the surgeon, painter, or software developer isn’t happy, they’ll likely go find somewhere else to work or something else to do, which is bad for me personally but also for my organization and the community. I also care because that’s what good leaders do: they care about and for their people. If we care about them, we should probably ask them periodically how they are doing. Of course, as leaders we should be doing one-on-ones and skip levels, but we could also use tools like developer NPS (Net Promoter Score) or surveys to get a sense of how people and teams are feeling. This type of survey is useful for understanding developer experience. DX is one of the firms in this space that helps provide qualitative data on what’s going well and what needs to be improved. I spoke about how I’ve seen this work in some of my past roles, if you are interested in listening or reading about that.
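For anyone who hasn't computed an NPS before, here is a minimal sketch of the standard calculation: the percentage of promoters (ratings of 9 or 10) minus the percentage of detractors (ratings of 0 through 6). The function name and the sample ratings are made up for illustration; a real developer survey would pair the number with the qualitative comments, which is where most of the value is.

```python
def net_promoter_score(ratings):
    """Return the NPS (from -100 to 100) for a list of 0-10 survey ratings.

    Hypothetical helper for illustration; it is not taken from any survey tool.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100.0 * (promoters - detractors) / len(ratings)


# Made-up responses to "How likely are you to recommend working on this team?"
sample_ratings = [10, 9, 8, 7, 6, 3, 9, 10]
print(net_promoter_score(sample_ratings))  # 4 promoters, 2 detractors out of 8 -> 25.0
```

A single score like this hides a lot, so I would treat it as a trend to watch over time and not as a target.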

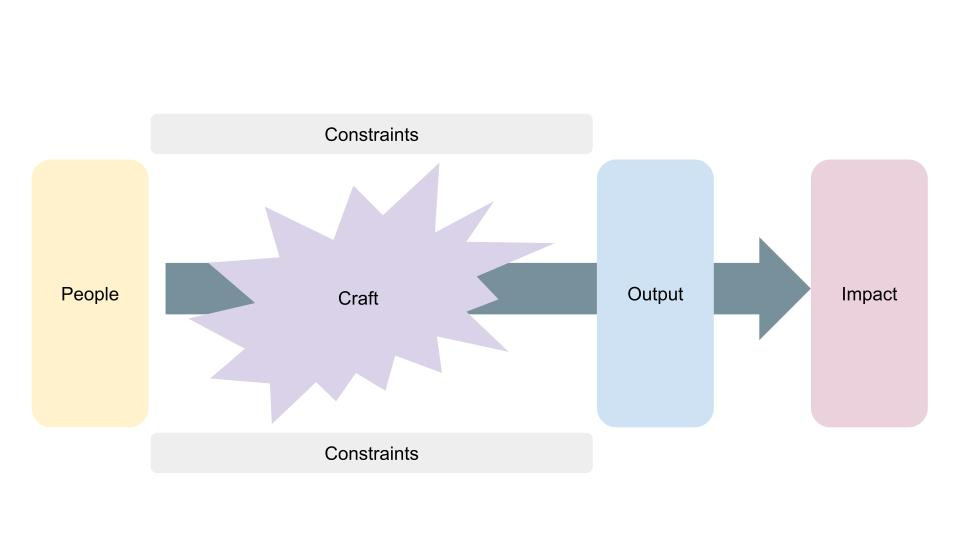

So in my mental model of software development, we have people who craft software within some constraints, producing an output, usually a feature, that hopefully makes an impact.

As I mentioned above, we really shouldn’t try to measure the craft. Nobody cares which artist uses the fewest brush strokes. Nobody is interested in how many sutures a surgeon uses, except maybe other surgeons because they are studying patterns and techniques in order to help everyone in their community to get better. Similarly, software engineers and architects might discuss, share, or even debate patterns and designs. We need to stay out of this space with measurements because we aren’t even close to being able to measure anything meaningful yet.

The constraints are worth measuring because, as I mentioned earlier in this article, I do care how things get done. Sometimes these constraints are cost. We might need our ML models to run in a manner that is ROI positive (has more impact in terms of revenue than cost in terms of hosting expenses). Sometimes, hopefully not often, we might have a time constraint where we absolutely need to launch by a certain date, such as when a marketing blast is scheduled for that date. Marty Cagan calls these high-integrity commitments; sometimes they are necessary, but they shouldn’t be the norm. We certainly do care about the culture and that our teammates are working in a manner that reinforces its established principles.
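As a rough sketch of what checking the cost constraint could look like, the snippet below compares the revenue attributed to a model against its hosting expenses. The function names and the dollar figures are hypothetical, and in practice attributing revenue to a single model is the hard part that this glosses over.

```python
def roi(attributed_revenue, hosting_cost):
    """Return ROI as a fraction: (revenue - cost) / cost.

    Hypothetical helper; both inputs are assumed to cover the same time period.
    """
    if hosting_cost <= 0:
        raise ValueError("hosting_cost must be positive")
    return (attributed_revenue - hosting_cost) / hosting_cost


def is_roi_positive(attributed_revenue, hosting_cost):
    """True when the model brings in more revenue than it costs to host."""
    return attributed_revenue > hosting_cost


# Made-up monthly figures for a single ML model.
monthly_revenue = 12_000
monthly_hosting = 8_000
print(is_roi_positive(monthly_revenue, monthly_hosting))  # True
print(roi(monthly_revenue, monthly_hosting))              # 0.5
```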

This brings us to the output. I think we should celebrate the output with a round of applause at a demo or a “congratulations!” on an email thread. But I don’t think we should measure it, primarily because that’s not the job. The job to be done is to make an impact. As I’ve written about before, it’s not uncommon for 85% of new features to have either a neutral or a negative effect on the impact metrics. If you measure the output, you’re sending the signal that output is in some way the purpose when it is not.

This leaves us with impact, which I do think should be the primary, but not only, measurement. There is of course a downside to measuring impact, and most often it shows up when you give only one team the credit. This drives the unwanted behavior of competition between teams instead of cooperation. Measure impact but don’t “split the baby” as the proverb warns. Give multiple teams credit. There is no business downside to giving multiple teams credit for the same impact. We don’t lose any actual revenue. This to me is a lot like titles or awards: they cost very little but are very meaningful to people. Certainly their value lessens if you give them out willy-nilly, but they aren’t precious either.

One final note on impact measurements: they should be team-based and not used on individuals. As I’ve suggested before, disassociate achieving product goals from employee evaluations, promotions, or raises. When it comes to individual evaluations, promotions, or raises, being part of a successful or unsuccessful team should not be a determining factor. These decisions should be based on demonstrated behaviors and competencies.

So this all leaves us with a model something like this, where we measure all around the process of software development. We make sure the people are doing well and that the development processes make it as easy as possible to get work done. Then we measure the constraints that we expect people to work within. And finally, we measure the impact to make sure we’re accomplishing what we expected to accomplish.

I don’t think I’ve added anything new here, just put it in a framework that I can understand and talk about. If this helps please use it to discuss with your other executives or finance teams how you think about measuring developer productivity. Or, make your own framework out of the plethora of excellent content out there. No matter what, have a framework that you feel comfortable deploying because I don’t think there is any turning back from measuring developer productivity.

Excellent piece. Any form of measurement can be seen as setting an incentive, and nearly every incentive can lead to unintended consequences. I recall a conversation during a casual meetup over coffee when an engineer, after a few drinks, boasted about writing fewer than 10 lines of code a year and still drawing a six-figure salary. Essentially, he felt he was being paid to do next to nothing since the company's executives emphasized maintaining system stability by tracking Mean Time Between Failures (MTBF). In essence, if you implemented a change that caused the website to crash, you'd lose your bonus. From an engineering standpoint, the most foolproof way to maintain a flawless MTBF metric is to resist making changes. Introducing such a Key Performance Indicator (KPI) can inadvertently encourage undesired behaviors, such as avoiding code modifications. Jokingly, I asked him how he'd react if the company started monitoring his coding activity. His reply was both disheartening and amusing: "Why not just sprinkle in a few unit tests or add redundant code that's never activated?"

Ultimately, the most reliable gauge of engineering quality might be peer evaluation. Interestingly, during my time as an individual contributor, it was always evident who was genuinely adding value (whether through code reviews or other interactions) and who was merely coasting.

But the challenge remains: What happens when you onboard an enthusiastic fresh graduate eager to contribute, but who, due to inexperience, inadvertently destabilizes the system? Almost all contemporary software systems are intricate, often running into thousands of lines of code.

From a business standpoint, it's easy to see the challenges. Research & Development can be pricey, and determining its return on investment is no simple task. In the end, all measurement mechanisms have their flaws, though some can be helpful temporarily. This underscores the importance of tech-savvy leadership. Drawing from your surgeon analogy, managing a hospital might be more straightforward if the person at the helm possesses a solid medical background.